Xin Weng

SegRap2025: A Benchmark of Gross Tumor Volume and Lymph Node Clinical Target Volume Segmentation for Radiotherapy Planning of Nasopharyngeal Carcinoma

Jan 28, 2026

Abstract: Accurate delineation of Gross Tumor Volume (GTV), Lymph Node Clinical Target Volume (LN CTV), and Organ-at-Risk (OAR) from Computed Tomography (CT) scans is essential for precise radiotherapy planning in Nasopharyngeal Carcinoma (NPC). Building upon SegRap2023, which focused on OAR and GTV segmentation using single-center paired non-contrast CT (ncCT) and contrast-enhanced CT (ceCT) scans, the SegRap2025 challenge aims to enhance the generalizability and robustness of segmentation models across imaging centers and modalities. SegRap2025 comprises two tasks. Task01 addresses GTV segmentation using paired CT from the SegRap2023 dataset, with an additional external testing set to evaluate cross-center generalization. Task02 focuses on LN CTV segmentation using multi-center training data and an unseen external testing set, where each case contains either paired CT scans or a single modality, emphasizing both cross-center and cross-modality robustness. This paper presents the challenge setup and provides a comprehensive analysis of the solutions submitted by ten participating teams. For the GTV segmentation task, the top-performing models achieved an average Dice Similarity Coefficient (DSC) of 74.61% and 56.79% on the internal and external testing cohorts, respectively. For the LN CTV segmentation task, the highest average DSC values reached 60.24%, 60.50%, and 57.23% on the paired CT, ceCT-only, and ncCT-only subsets, respectively. SegRap2025 establishes a large-scale multi-center, multi-modality benchmark for evaluating generalization and robustness in radiotherapy target segmentation, providing valuable insights toward clinically applicable automated radiotherapy planning systems. The benchmark is available at: https://hilab-git.github.io/SegRap2025_Challenge.
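The DSC figures quoted above measure the overlap between a predicted mask and a reference mask. As a minimal sketch (not the challenge's official evaluation code), the standard definition DSC = 2|A ∩ B| / (|A| + |B|) can be computed on flattened binary masks as follows. The function name `dice_coefficient`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_coefficient(pred, target):
    """Dice Similarity Coefficient for two flattened binary masks (0/1 values)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / total


# Toy example: 3 foreground voxels each, 2 of them shared.
pred = [0, 1, 1, 1, 0, 0]
target = [0, 0, 1, 1, 1, 0]
score = dice_coefficient(pred, target)
print(f"DSC = {score:.4f}")
```

Multiplying the score by 100 gives the percentage form used in the abstract.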

Joint Left Atrial Segmentation and Scar Quantification Based on a DNN with Spatial Encoding and Shape Attention

Jun 23, 2020

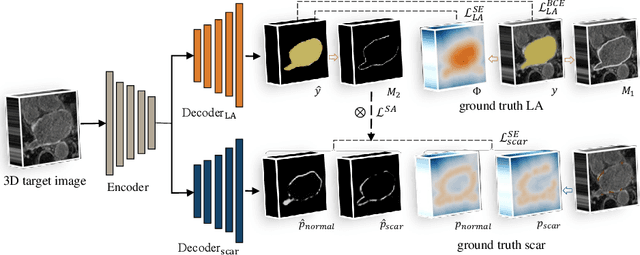

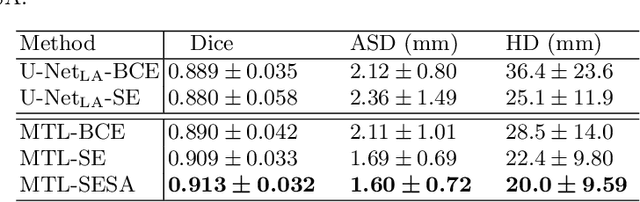

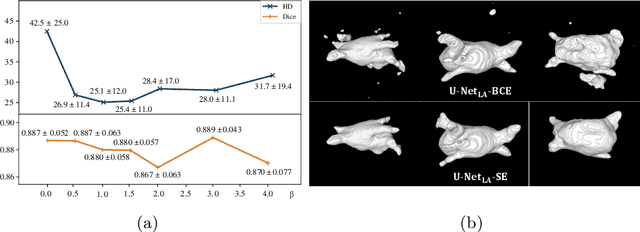

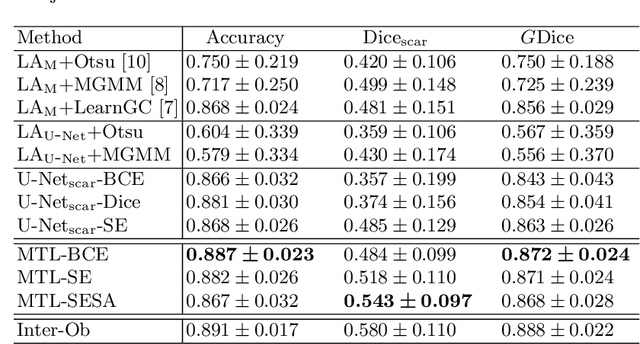

Abstract: We propose an end-to-end deep neural network (DNN) which can simultaneously segment the left atrial (LA) cavity and quantify LA scars. The framework incorporates the continuous spatial information of the target by introducing a spatially encoded (SE) loss based on the distance transform map. Compared to a conventional binary-label-based loss, the proposed SE loss can reduce the noisy patches in the resulting segmentation that are commonly seen with deep learning-based methods. To fully utilize the inherent spatial relationship between the LA and LA scars, we further propose a shape attention (SA) mechanism through an explicit surface projection to build an end-to-end-trainable model. Specifically, the SA scheme is embedded into a two-task network to perform the joint LA segmentation and scar quantification. Moreover, the proposed method can alleviate the severe class-imbalance problem when detecting small and discrete targets like scars. We evaluated the proposed framework on 60 LGE MRI scans from the MICCAI2018 LA challenge. For LA segmentation, the proposed method reduced the mean Hausdorff distance from 36.4 mm to 20.0 mm compared to the 3D basic U-Net using the binary cross-entropy loss. For scar quantification, the method was compared with the results or algorithms reported in the literature and demonstrated better performance.
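The Hausdorff distance reported above is the largest distance from any point on one surface to its nearest point on the other, taken in both directions. The following is a brute-force sketch of that general definition, not the authors' evaluation code. The function names and the toy point sets are hypothetical, and coordinates are assumed to already be in millimetres.

```python
import math


def directed_hausdorff(a, b):
    """Largest nearest-neighbor distance from points in a to the set b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    if not a or not b:
        raise ValueError("both point sets must be non-empty")
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy surfaces: they share one point, and one outlier lies 3 mm from the other set.
surface_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
surface_b = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
hd = hausdorff_distance(surface_a, surface_b)
print(f"Hausdorff distance = {hd:.1f} mm")
```

Because the metric takes a maximum, a single stray patch far from the true surface dominates the result, which is why suppressing noisy patches can lower it sharply. The pairwise search here is quadratic in the number of points, so it is only practical for small point sets.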

* 10 pages
